March 6, 2024

OpenFin + AWS Bedrock

Although OpenFin is not positioned specifically as an AI solution, customers often raise AI in conversations with us, which speaks to OpenFin's versatility and appeal. OpenFin Workspace holds no data and no underlying apps of its own, so it could easily be overlooked on the standard paths for GenAI ideation. We set out to explore how GenAI could interface with Workspace to give firms and users a flexible, dynamic interface for GenAI tools.

As AWS Partners, we chose to explore Bedrock’s GenAI service.

Setup and initial testing were a breeze, with transparent communication on usage costs, which is particularly important given the resource-intensive nature of GenAI experimentation. Bedrock has the added benefits of offering a single API to access multiple Foundation Models (FMs) and of being serverless, both of which improved our delivery time. Using its well-documented APIs, we connected Bedrock to Workspace. To create our test environment, we loaded up a fresh Workspace using our publicly available starter kit: https://github.com/built-on-openfin/workspace-starter.

AI Prompts

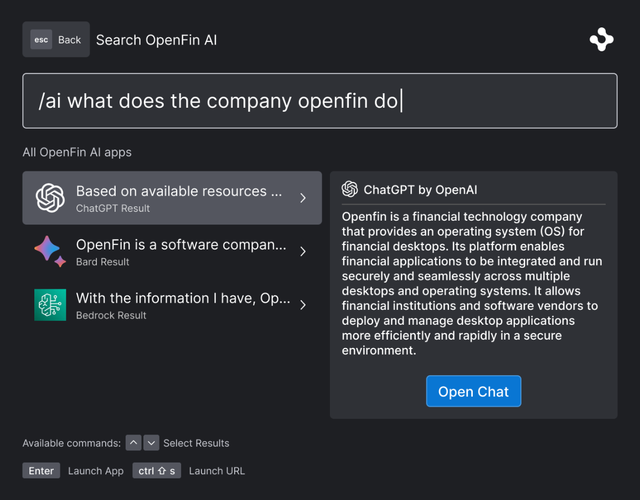

We started with prompts. A key feature of our Home component is its Search bar. Beyond searching for apps and data inside our sample Workspace, Home became the Command Line Interface (CLI) for Bedrock. We used AWS’s Titan FM because it supports text summarization, natural language Q&A and extraction. We relied on the model’s pre-trained data, so our test user can ask Bedrock natural language questions and see the answers directly in the results card in Home.
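To make the prompt flow concrete, here is a minimal sketch of how text typed into a search bar could be turned into a Titan text request and how the answer could be read back. It is not the code from our demo: the model ID, the default token limit and the helper names `buildTitanRequest` and `extractAnswer` are assumptions for illustration.

```typescript
// Request and response shapes for Amazon Titan text models on Bedrock.
interface TitanTextRequest {
  inputText: string;
  textGenerationConfig: {
    maxTokenCount: number;
    temperature: number;
    topP: number;
    stopSequences: string[];
  };
}

interface TitanTextResponse {
  inputTextTokenCount: number;
  results: { tokenCount: number; outputText: string; completionReason: string }[];
}

// Assumed model ID; check which Titan models are enabled in your AWS account.
const MODEL_ID = "amazon.titan-text-express-v1";

// Turn the text typed into the search bar into an InvokeModel request body.
function buildTitanRequest(query: string, maxTokenCount = 512): TitanTextRequest {
  return {
    inputText: query.trim(),
    textGenerationConfig: { maxTokenCount, temperature: 0, topP: 1, stopSequences: [] },
  };
}

// Pull the generated text out of the raw JSON response body.
function extractAnswer(rawBody: string): string {
  const parsed = JSON.parse(rawBody) as TitanTextResponse;
  return parsed.results.map((r) => r.outputText).join("").trim();
}
```

With the AWS SDK for JavaScript, the stringified request would typically be sent as the `body` of an `InvokeModelCommand` from `@aws-sdk/client-bedrock-runtime`, together with `modelId: MODEL_ID` and `contentType: "application/json"`. The response body arrives as bytes and can be decoded with `TextDecoder` before being passed to `extractAnswer`.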

Connecting other LLMs (third-party and internal) to Home is easy. With our embedded search filters, our test user can select which model to use when more than one service is connected to Home. One UI, multiple LLMs.
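One way to picture "one UI, multiple LLMs" is a small registry that maps filter selections to connected services. The sketch below is illustrative only: `ModelProvider`, `ModelRegistry` and the labels are hypothetical names, and it does not use the Home API itself.

```typescript
// A connected LLM service: a label shown in the filter plus a query function.
interface ModelProvider {
  id: string;
  label: string;
  ask: (prompt: string) => Promise<string>;
}

class ModelRegistry {
  private providers: ModelProvider[] = [];

  // Add a provider, replacing any earlier one with the same id.
  register(provider: ModelProvider): void {
    this.providers = this.providers.filter((p) => p.id !== provider.id);
    this.providers.push(provider);
  }

  // Labels to offer as filter options in the search UI.
  filterOptions(): string[] {
    return this.providers.map((p) => p.label);
  }

  // Providers matching the selected labels; no selection means all providers.
  select(selectedLabels: string[]): ModelProvider[] {
    if (selectedLabels.length === 0) return this.providers.slice();
    return this.providers.filter((p) => selectedLabels.includes(p.label));
  }

  // Send the prompt to each selected provider and key the answers by label.
  async ask(prompt: string, selectedLabels: string[] = []): Promise<Record<string, string>> {
    const targets = this.select(selectedLabels);
    const answers = await Promise.all(targets.map((p) => p.ask(prompt)));
    const byLabel: Record<string, string> = {};
    targets.forEach((p, i) => {
      byLabel[p.label] = answers[i];
    });
    return byLabel;
  }
}
```

In this design, adding another model is a single `register` call, and the search UI only needs `filterOptions()` to render the choices and `ask()` to fetch results.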

With many mainstream vendors providing app-specific GenAI tools and more AI solutions emerging, it’s easy to imagine a single user needing to navigate dozens of co-pilots, prompts and chat interfaces, and enduring more complexity, not less, with the adoption of AI. A single interoperable CLI solves that problem.

Furthermore, a single UI with Search and Launch allows users to initiate workflows and dive deeper into GenAI-generated insights. This empowers users to work with AI tools, not be replaced by them.

AI Workflows: Content Summaries and Extraction

From prompts, we shift to content. Models require content to summarize and extract insights from. For certain use cases a universal corpus is appropriate, but others need internal and proprietary content. For this, OpenFin offers another set of tools.

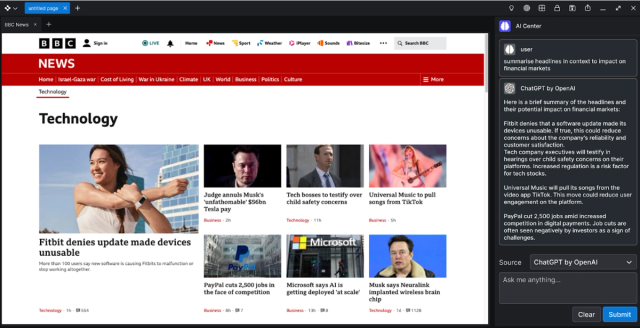

Web Apps easily load into our Browser.

Behind the scenes, our Message Bus enables client-side messaging, and Channels enable secure, two-way communication across a selected set of two or more apps. We built a complementary AI widget and used Channels to sync it with another desktop app that holds content. Our test users could now generate summaries of content from this app or any web app in Workspace Browser.
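The widget side of such a Channel can be sketched as a handler registered on a channel provider. This is an assumption-laden illustration, not our widget's code: the `summarize` topic, the payload shape and the `ChannelProviderLike` interface (a minimal stand-in for the provider object, so the sketch runs outside the OpenFin runtime) are all hypothetical.

```typescript
// Minimal slice of a channel provider: only the part this sketch relies on.
interface ChannelProviderLike {
  register(topic: string, handler: (payload: unknown) => unknown): boolean;
}

// Hypothetical message shapes exchanged between a content app and the widget.
interface SummaryRequest {
  sourceApp: string;
  text: string;
}

interface SummaryReply {
  sourceApp: string;
  summary: string;
}

const SUMMARIZE_TOPIC = "summarize";

// Validate an incoming payload before it reaches the model.
function parseSummaryRequest(payload: unknown): SummaryRequest {
  const candidate = payload as Partial<SummaryRequest> | null;
  if (
    !candidate ||
    typeof candidate.sourceApp !== "string" ||
    typeof candidate.text !== "string" ||
    candidate.text.trim() === ""
  ) {
    throw new Error("Expected { sourceApp: string, text: string } with non-empty text");
  }
  return { sourceApp: candidate.sourceApp, text: candidate.text };
}

// Register the widget's handler; `summarize` is whatever function calls the model.
function registerSummaryAction(
  provider: ChannelProviderLike,
  summarize: (text: string) => Promise<string>
): void {
  provider.register(SUMMARIZE_TOPIC, async (payload): Promise<SummaryReply> => {
    const request = parseSummaryRequest(payload);
    return { sourceApp: request.sourceApp, summary: await summarize(request.text) };
  });
}
```

Inside an OpenFin app, the provider would typically come from `fin.InterApplicationBus.Channel.create(...)`, and a content app would connect with `fin.InterApplicationBus.Channel.connect(...)` and call `dispatch("summarize", { sourceApp, text })` on the resulting client. The channel name is left to the implementer.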

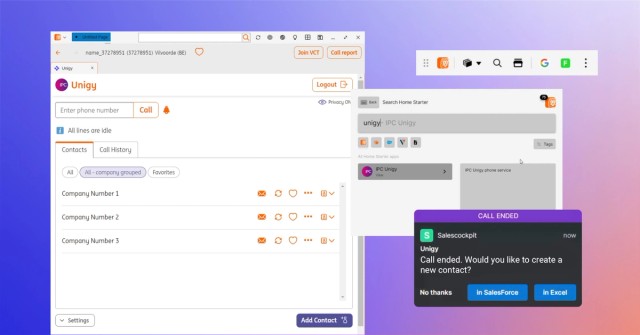

AI on the Glass: Native Apps and Multiple Apps

Using our .NET adapter, we connected a native desktop app to this AI flow, so even legacy apps can be connected to our AI widget. With Channels, we connected two apps (in our case, a news app and a blotter) to our widget.

The Result: Instead of providing a summary of all the news in our app, we received a summary of the news relevant to our list of securities. This on-the-glass AI experience opens up a range of personalization and customer service workflows.
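The post does not spell out how relevance was determined, so the following is just one plausible approach: filter the news items against the blotter's securities on the client before building the prompt. The `NewsItem` shape, the ticker tags and both function names are assumptions for illustration.

```typescript
// Hypothetical shape of a news item shared by the news app.
interface NewsItem {
  headline: string;
  body: string;
  tickers: string[];
}

// Keep only items tagged with at least one security from the blotter.
function selectRelevantNews(news: NewsItem[], blotterTickers: string[]): NewsItem[] {
  const wanted = new Set(blotterTickers.map((t) => t.toUpperCase()));
  return news.filter((item) => item.tickers.some((t) => wanted.has(t.toUpperCase())));
}

// Build a summarization prompt from the relevant items; empty if none match.
function buildSummaryPrompt(news: NewsItem[], blotterTickers: string[]): string {
  const relevant = selectRelevantNews(news, blotterTickers);
  if (relevant.length === 0) return "";
  const tickers = blotterTickers.map((t) => t.toUpperCase()).join(", ");
  const articles = relevant
    .map((item, i) => `${i + 1}. ${item.headline}\n${item.body}`)
    .join("\n\n");
  return `Summarize the news below for a user tracking these securities: ${tickers}\n\n${articles}`;
}
```

Filtering before the model call keeps the prompt short and means the summary can only draw on items tied to the user's securities. An alternative would be to pass everything and ask the model to filter, at the cost of more tokens.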

The best part: building on top of our workspace starter and initializing the Bedrock APIs took approximately one week of development time from one engineer. These positive outcomes in our GenAI exploration led us to integrate Bedrock into our sales demo environment. We’ve been pleasantly surprised by clients’ reactions to the simplicity and flexibility of implementing GenAI workflows with OpenFin Workspace.

Want to see OpenFin in Action?

Interested in continuing the conversation about the simplicity and flexibility of implementing GenAI workflows with OpenFin Workspace? Let's chat. Set up a demo with an OpenFin expert today.
